In brief we:

Help protect younger children from sexual abuse.

Every 2 minutes our analysts in Cambridge remove a photo online of a child suffering sexual abuse.

As one of the world’s leading organisations fighting online child sexual abuse, we rely on the generous support of members of the public, charitable giving bodies and the business community. Your support will help us continue and increase our vital work helping these victims.

We’ve deliberately scaled up our tech team to enhance the efforts of analysts in our Hotline.

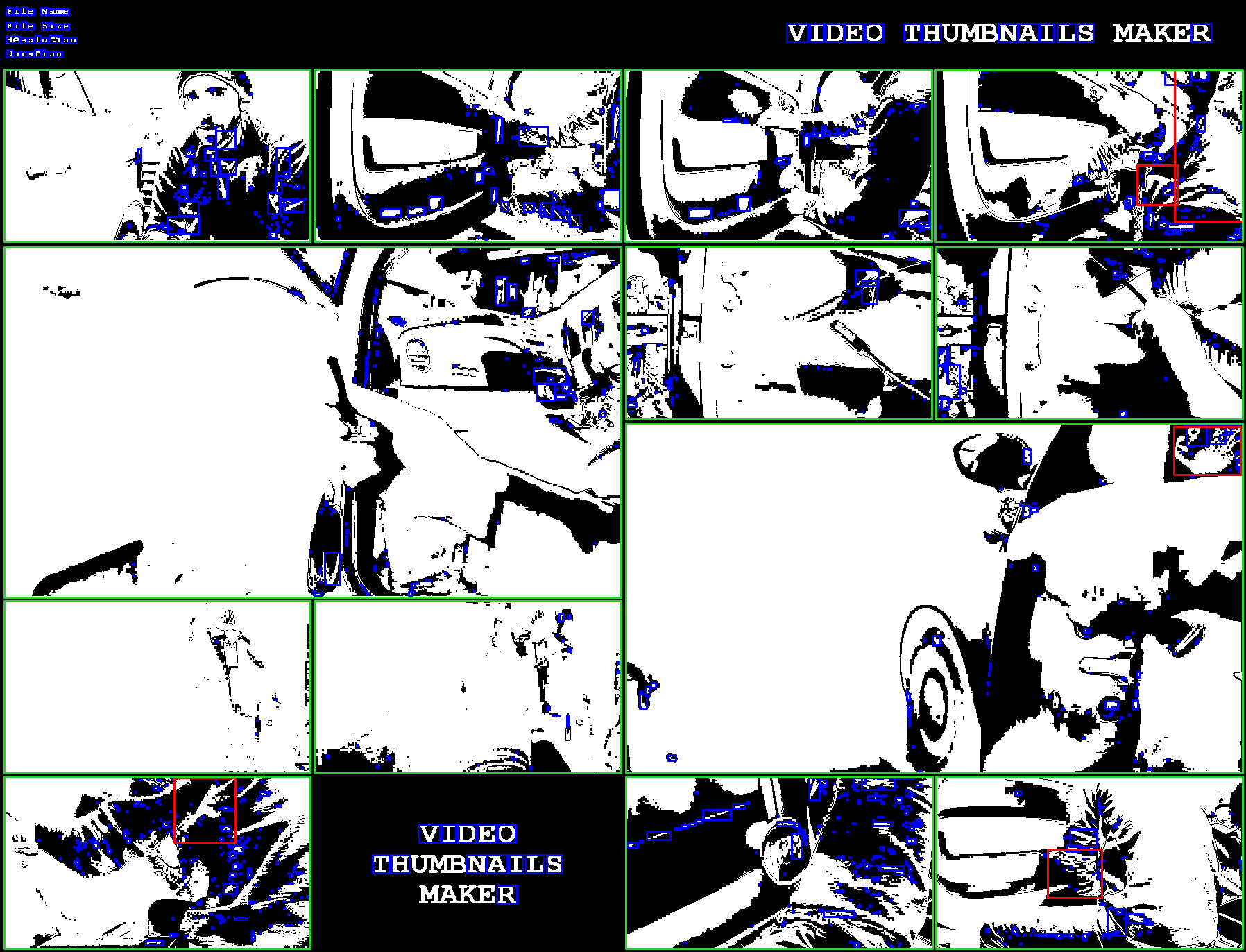

One of the challenges we’re tackling is the recurring issue of ‘grid images’ in the hashing process. This process creates hashes, or digital fingerprints, of child sexual abuse images and videos that can be used to identify and block the images online.

Grid images, however, are notorious for causing perceptual hash collisions, which means that the perceptual hashes from grids will sometimes match images of simple repeating patterns.
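To illustrate why this happens, here is a minimal sketch using a toy "average hash", which is a generic perceptual hash and not the algorithm we or our partners use. The function names, image sizes and pixel values are all assumptions made for the example. It builds a synthetic tiled image with fine detail inside each tile and a plain repeating pattern with no detail at all, and shows that both produce the same hash once the detail is averaged away.

```python
def average_hash(pixels, size=8):
    """Toy average hash: block-average to size x size, then threshold at the mean."""
    height, width = len(pixels), len(pixels[0])
    block_h, block_w = height // size, width // size
    small = []
    for r in range(size):
        for c in range(size):
            block = [pixels[y][x]
                     for y in range(r * block_h, (r + 1) * block_h)
                     for x in range(c * block_w, (c + 1) * block_w)]
            small.append(sum(block) / len(block))
    mean = sum(small) / len(small)
    # One bit per downsampled cell: 1 if brighter than the overall mean.
    return sum(1 << i for i, value in enumerate(small) if value > mean)


def make_tiled_image(textured, tiles=8, tile_px=8):
    """Synthetic greyscale image of alternating bright and dark tiles.

    With textured=True each tile carries fine detail (standing in for a
    sub-image); with textured=False the tiles are flat (a simple pattern).
    """
    image = []
    for y in range(tiles * tile_px):
        row = []
        for x in range(tiles * tile_px):
            base = 200 if (y // tile_px + x // tile_px) % 2 == 0 else 50
            detail = ((x * 7 + y * 13) % 5 - 2) * 10 if textured else 0
            row.append(base + detail)
        image.append(row)
    return image


grid_like = make_tiled_image(textured=True)
plain_pattern = make_tiled_image(textured=False)

print(grid_like != plain_pattern)                                # True: the pixels differ
print(average_hash(grid_like) == average_hash(plain_pattern))    # True: the hashes collide
```

The more sub-images a grid contains, the less of each one survives the downsampling step, so the hash increasingly reflects only the layout of the grid.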

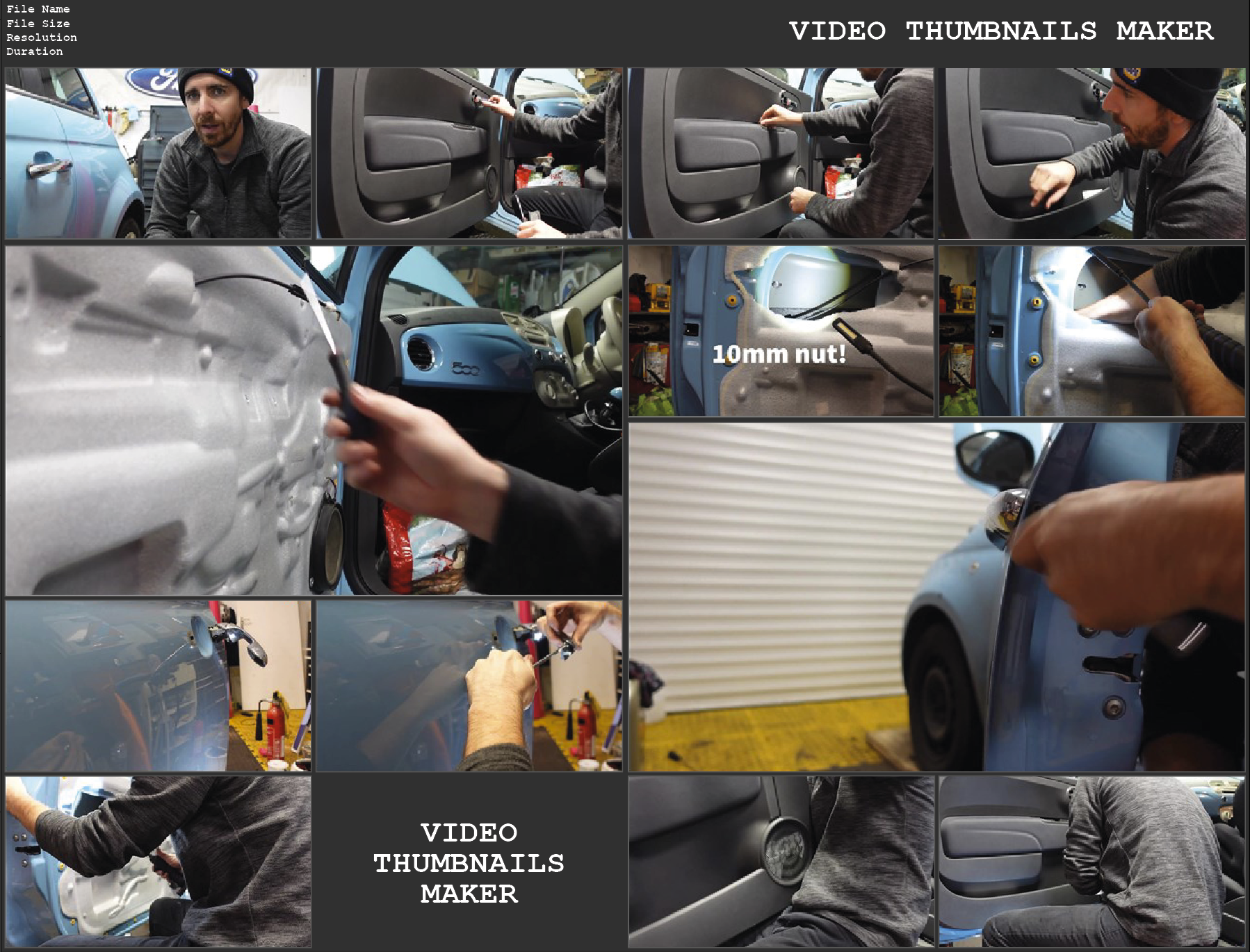

A grid is a particular type of preview image used by offenders to advertise and make money from selling child sexual abuse videos online. Offenders create the grid images from video frames of the criminal content and post them on the internet along with a link to entice buyers to a premium file sharing service. These services require a subscription for users to access and download the full video.

You can read more about commercial child sexual abuse imagery here.

Grids can constitute up to 40% of the images we process in reports at any given point in time. But most of the tech companies who use our data to block child sexual abuse imagery exclude grid image hashes from searches because of the chance that they might clash with another image.

While there is no standard layout, background colour, sub-image size or software used to generate grids, we established that collisions are more likely to occur when a grid contains 7×7 sub-images or more.

To handle grids in an automated fashion, we created a process that can detect the background and separate the images back into the constituent frames from the video for perceptual hash matching and clustering.
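The general idea can be sketched as follows. This is a simplified illustration under stated assumptions, not our production code: it works on greyscale images held as lists of rows, guesses the background from the most common border value, and assumes the gutters between sub-images are straight, unbroken bands of background. All function names and the `tol` tolerance are hypothetical.

```python
from collections import Counter


def estimate_background(image):
    """Guess the background as the most common pixel value on the border."""
    border = (image[0] + image[-1]
              + [row[0] for row in image] + [row[-1] for row in image])
    return Counter(border).most_common(1)[0][0]


def content_runs(is_background):
    """Return (start, stop) index pairs for runs of non-background lines."""
    runs, start = [], None
    for i, background in enumerate(is_background):
        if not background and start is None:
            start = i
        elif background and start is not None:
            runs.append((start, i))
            start = None
    if start is not None:
        runs.append((start, len(is_background)))
    return runs


def split_grid(image, tol=8):
    """Split a grid into sub-images along rows and columns of pure background."""
    background = estimate_background(image)

    def near(value):
        return abs(value - background) <= tol

    row_is_bg = [all(near(v) for v in row) for row in image]
    col_is_bg = [all(near(row[x]) for row in image) for x in range(len(image[0]))]
    return [[row[x0:x1] for row in image[y0:y1]]
            for y0, y1 in content_runs(row_is_bg)
            for x0, x1 in content_runs(col_is_bg)]


def make_grid(rows, cols, tile_h, tile_w, gap, background=255):
    """Build a synthetic grid whose tiles hold the values 0, 10, 20, ..."""
    height = gap + rows * (tile_h + gap)
    width = gap + cols * (tile_w + gap)
    image = [[background] * width for _ in range(height)]
    for r in range(rows):
        for c in range(cols):
            y0, x0 = gap + r * (tile_h + gap), gap + c * (tile_w + gap)
            for y in range(tile_h):
                for x in range(tile_w):
                    image[y0 + y][x0 + x] = 10 * (r * cols + c)
    return image


tiles = split_grid(make_grid(rows=2, cols=3, tile_h=4, tile_w=5, gap=2))
print(len(tiles))                         # 6
print(len(tiles[0]), len(tiles[0][0]))    # 4 5
```

Each extracted tile could then be hashed on its own. A sketch this simple would wrongly split a sub-image that happens to contain a full row or column of background colour, which is one reason a real detector needs to be more careful.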

Our testing on synthetic grids and real-world child sexual abuse material has shown this approach to be 95% effective, with a 0.2% false positive rate. A false positive here is an image that is wrongly flagged as a grid and has sub-images extracted from it in error.

The software we have developed so far is too slow to be useful in real-time detection for external tech organisations, but it is suitable for IWF purposes, and we are working with partners internationally to optimise the code.

This means we can now:

In a related area of our work, we use DBSCAN clustering with PhotoDNA hashes to group very similar images together. This improves our ability to assess visually near-identical images, such as simple resizes, and makes our assessments more consistent, because we can compare assessments made by different analysts for slightly altered copies of the same image. We found assessments based on clusters to be 112% faster than assessing individual images.
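For readers unfamiliar with DBSCAN, here is a small self-contained sketch of the algorithm applied to hash vectors. It is an illustration only: the four-number vectors stand in for real hashes, which are much longer, and the Euclidean distance, `eps` and `min_samples` values are assumptions chosen for the example rather than the settings we use.

```python
import math


def dbscan(points, eps, min_samples):
    """Minimal DBSCAN. Returns one label per point: 0, 1, ... or -1 for noise."""

    def neighbours(i):
        # Indices of all points within eps of point i (including i itself).
        return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]

    labels = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        seeds = neighbours(i)
        if len(seeds) < min_samples:
            labels[i] = -1  # provisionally noise; may become a border point later
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reached from a core point joins as border
            if labels[j] is not None:
                continue
            labels[j] = cluster
            reachable = neighbours(j)
            if len(reachable) >= min_samples:
                queue.extend(reachable)  # j is a core point, so keep expanding
    return labels


# Toy "hashes": three near-copies of one image, two of another, one unrelated.
toy_hashes = [
    (10, 10, 10, 10), (11, 10, 9, 10), (10, 12, 10, 11),
    (200, 200, 200, 200), (201, 199, 200, 200),
    (90, 0, 255, 30),
]
print(dbscan(toy_hashes, eps=5, min_samples=2))  # [0, 0, 0, 1, 1, -1]
```

Because DBSCAN does not need the number of clusters in advance and leaves unmatched items as noise, it suits a collection where most images have only a handful of near-copies.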

Grid images become relevant to clustering when we deal with videos. The standard PhotoDNA process for videos is to first extract the frames, which can then be assessed as images. The video receives its overall assessment rating based on the “highest category” assessment of any of its constituent frames. This way the video itself can be graded for the severity of the child sexual abuse it contains. We also account for any frames extracted and circulated individually.
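The "highest category" rule amounts to taking a maximum over the frame assessments. A minimal sketch, assuming a hypothetical severity scale in which a larger number means a more severe category (the labels below are placeholders, not our actual categories):

```python
from enum import IntEnum


class Severity(IntEnum):
    """Hypothetical severity scale; a higher value means more severe."""
    NONE = 0
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def grade_video(frame_grades):
    """Return the most severe grade among the frames, or NONE if there are none."""
    return max(frame_grades, default=Severity.NONE)


frames = [Severity.LOW, Severity.HIGH, Severity.MEDIUM]
print(grade_video(frames).name)  # HIGH
```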

The frames from any given video form a PhotoDNA cluster in much the same way as copies of the same image would, because each frame is very similar to the frames before and after it. In the case of grids, this means that the sub-images extracted from a grid merge into the cluster for the source video (if we have it to match against), which can provide vital context for us when assessing images.
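One way to picture this matching step is sketched below. It assumes existing clusters are stored as lists of hash vectors keyed by a video identifier, and that a sub-image joins a cluster if it lies within a distance `eps` of any member. The data layout, the function names and the majority vote across sub-images are all assumptions for illustration.

```python
import math
from collections import Counter


def match_cluster(hash_vec, clusters, eps):
    """Return the id of the cluster with the closest member within eps, else None."""
    best_id, best_distance = None, eps
    for cluster_id, members in clusters.items():
        for member in members:
            distance = math.dist(hash_vec, member)
            if distance <= best_distance:
                best_id, best_distance = cluster_id, distance
    return best_id


def source_video_for_grid(sub_image_hashes, clusters, eps):
    """Pick the cluster that most of a grid's sub-images fall into, if any."""
    votes = Counter(match_cluster(h, clusters, eps) for h in sub_image_hashes)
    votes.pop(None, None)  # ignore sub-images that matched nothing
    return votes.most_common(1)[0][0] if votes else None


# Toy two-number "hashes" for the frames of two known videos.
known_clusters = {
    "video_a": [(10, 10), (12, 11), (14, 12)],
    "video_b": [(200, 200), (198, 203)],
}
grid_sub_images = [(11, 10), (13, 12), (90, 90)]
print(source_video_for_grid(grid_sub_images, known_clusters, eps=3))  # video_a
```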

For example, the sub-images of a grid may not obviously be child sexual abuse material because the image does not show enough of the victim to be sure. But when a sub-image merges into an existing cluster, analysts can see where the grid came from, along with the images before and after it in the video, which can often confirm one way or the other whether the image is of a child.

This makes our work faster and more accurate, and closes the loopholes which criminals have tried to exploit in their making and sharing of child sexual abuse images and videos.